MODELSCAN

Protect Models From Attacks

ModelScan is a pioneering ML model scanner that can analyze a wide range of model formats. It checks model files for potentially unsafe code, helping ensure that the AI solutions you deploy are secure. It is fully open source and free to use.
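To make "checking for unsafe code" concrete, here is a minimal sketch of one such check for pickle-based model files. It is not ModelScan's actual implementation: the `SUSPICIOUS_OPCODES` set and the `find_suspicious_opcodes` helper are illustrative assumptions. It uses only Python's standard `pickletools` module to walk the opcode stream without ever loading the file.

```python
import pickle
import pickletools

# Assumption: opcodes that can import or call arbitrary objects while unpickling.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def find_suspicious_opcodes(data: bytes) -> list[str]:
    """Return the names of suspicious opcodes in a pickle, without unpickling it."""
    found = []
    for opcode, _arg, _pos in pickletools.genops(data):
        if opcode.name in SUSPICIOUS_OPCODES:
            found.append(opcode.name)
    return found


class Payload:
    """Hypothetical malicious object: asks the unpickler to call print() on load."""

    def __reduce__(self):
        return (print, ("executed on load",))


# Plain data needs no imports or calls; the payload needs both.
safe = pickle.dumps({"weights": [0.1, 0.2]})
unsafe = pickle.dumps(Payload())

print(find_suspicious_opcodes(safe))
print(find_suspicious_opcodes(unsafe))
```

A real scanner would go further, for example by inspecting which modules and functions are referenced and by handling other serialization formats, but the idea is the same: inspect the file statically instead of loading it.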

OUR APPROACH

DETECT, RESPOND, & INNOVATE

Rakfort’s Model Scanner allows you to secure your digital supply chain before deployment, reducing the risk of adversarial code on your network. With the Model Scanner, you can identify and address potential risks, ensuring a safe and trusted environment.

Key Features

- Supports Leading Model Formats: Guardian supports popular frameworks such as PyTorch, TensorFlow, XGBoost, Keras, and more.

- Secure Gateway: Secure your ML model supply chain with Guardian’s endpoint, which captures and verifies the MLOps team’s model requests before delivery.

- Policy Engine: Guardian includes built-in policies for model acceptance or rejection, enforcing checks on the model’s origin, malicious code, safe storage formats, OSS licensing, and more.

- Automated Scanning: Integrate Guardian’s Scanner API into your development and deployment pipelines to scan models before distribution, ensuring they are safe to use.
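The accept-or-reject logic of a policy engine can be sketched as follows. This is only an illustration of the idea under stated assumptions, not Guardian's API: `ModelInfo`, `Policy`, and `evaluate` are hypothetical names, and the example origins, formats, and licenses are placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class ModelInfo:
    """Hypothetical description of a model requested by an MLOps team."""
    origin: str
    storage_format: str
    license: str
    scan_findings: list[str] = field(default_factory=list)


@dataclass
class Policy:
    """Hypothetical policy: allow-lists for origin, storage format, and license."""
    trusted_origins: set[str]
    safe_formats: set[str]
    allowed_licenses: set[str]


def evaluate(model: ModelInfo, policy: Policy) -> list[str]:
    """Return the list of policy violations; an empty list means 'accept'."""
    violations = []
    if model.origin not in policy.trusted_origins:
        violations.append(f"untrusted origin: {model.origin}")
    if model.storage_format not in policy.safe_formats:
        violations.append(f"unsafe storage format: {model.storage_format}")
    if model.license not in policy.allowed_licenses:
        violations.append(f"license not allowed: {model.license}")
    if model.scan_findings:
        violations.append(f"scanner findings: {', '.join(model.scan_findings)}")
    return violations


policy = Policy(
    trusted_origins={"internal-registry"},
    safe_formats={"safetensors", "onnx"},
    allowed_licenses={"apache-2.0", "mit"},
)

good = ModelInfo("internal-registry", "safetensors", "apache-2.0")
bad = ModelInfo("unknown-mirror", "pickle", "apache-2.0", ["REDUCE opcode"])

print(evaluate(good, policy))
print(evaluate(bad, policy))
```

In a deployment pipeline, a step like this would run before a model is distributed, and any non-empty result would block delivery.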

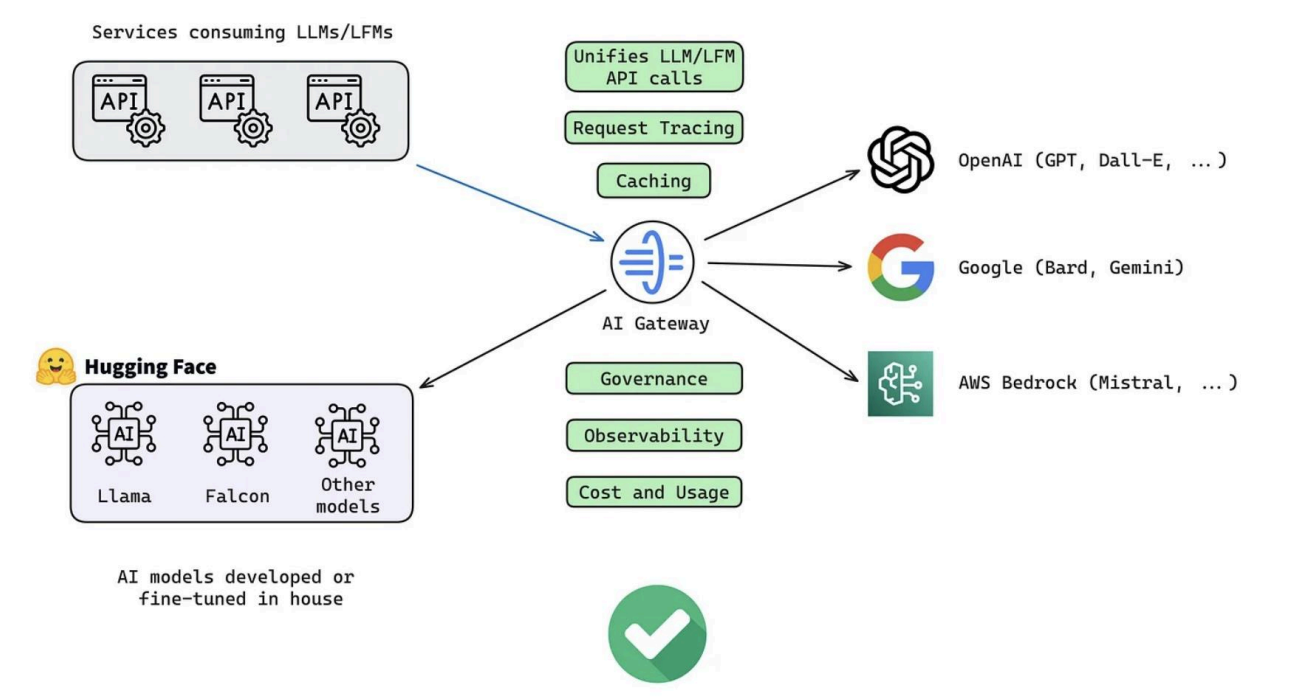

Features of LLM Gateway

- Unified Access: LLM gateways provide a single, unified interface for accessing multiple LLMs, simplifying interactions and reducing the need to manage various APIs or services separately.

- Authentication and Authorization

  - Centralized Key Management: Distributing core API keys can be risky; the AI Gateway centralizes key management, giving each developer their own API key while keeping root keys secure and accountable through integration with secret managers such as AWS SSM Parameter Store, Google Secret Manager, or Azure Key Vault.

  - Role-Based Access Control (RBAC): LLM gateways enforce security policies through RBAC, ensuring that only authorized users have access to certain models or functionalities.

- Performance Monitoring: Continuous monitoring of model performance, including latency, error rates, and throughput, allows organizations to ensure that models are operating as expected and to identify issues early.

- Usage Analytics: Detailed analytics on how models are being used, who is using them, and in what context help organizations optimize resource allocation and understand the impact of their AI initiatives.
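The key management, RBAC, and usage analytics features above can be illustrated with a small in-memory sketch. Everything here is an assumption made for illustration: the `Gateway` class, the `ROLE_MODELS` mapping, and the model and role names are hypothetical. A real gateway would fetch the root key from a secret manager and persist its state.

```python
import secrets
from collections import Counter

# Assumption: which models each role may call.
ROLE_MODELS = {
    "analyst": {"small-model"},
    "engineer": {"small-model", "large-model"},
}


class Gateway:
    """Hypothetical gateway: holds the root key, hands out per-developer keys."""

    def __init__(self, root_key: str):
        self._root_key = root_key   # never leaves the gateway
        self._developers = {}       # developer key -> (name, role)
        self.usage = Counter()      # (name, model) -> number of authorized calls

    def issue_key(self, name: str, role: str) -> str:
        """Create an individual API key so the root key is never shared."""
        if role not in ROLE_MODELS:
            raise ValueError(f"unknown role: {role}")
        key = secrets.token_urlsafe(16)
        self._developers[key] = (name, role)
        return key

    def authorize(self, dev_key: str, model: str) -> bool:
        """RBAC check: allow the call only if the caller's role covers the model."""
        entry = self._developers.get(dev_key)
        if entry is None:
            return False
        name, role = entry
        allowed = model in ROLE_MODELS[role]
        if allowed:
            self.usage[(name, model)] += 1  # feeds usage analytics
        return allowed


gateway = Gateway(root_key="placeholder-root-key")
alice_key = gateway.issue_key("alice", "engineer")
bob_key = gateway.issue_key("bob", "analyst")

print(gateway.authorize(alice_key, "large-model"))
print(gateway.authorize(bob_key, "large-model"))
print(gateway.usage)
```

Because every request passes through `authorize`, the same choke point that enforces access rules also produces the per-user, per-model counts needed for monitoring and analytics.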